In this article I cover how to install SUSE Linux Enterprise 10 on Hyper-V. What makes it different from other articles is that I also cover how to install the Hyper-V Integration Components for Linux and how to inject the Xen kernel into SUSE Linux Enterprise 10 running as a guest OS, to show how to unleash the claimed performance of Linux on Hyper-V.

I wrote this article because I came across many articles that show you how to install Hyper-V, and many others that show how to install Linux as a guest OS on Hyper-V, but I have not seen any that show the full installation of Linux on Hyper-V, including the Linux Integration Components and the Xen kernel. I also came across articles saying that it is not easy, such as “Fedora Core 8 on Hyper-V not so easy as it seems” and “MS Hyper-V vs VMware VI3”, but they do not explain how to do it, so readers cannot judge how hard it really is. So I decided to try it out, post my experience and, if possible, prove the opposite. If you want to jump to the conclusion before reading the full article, here it is: “yes, it’s not as easy as I expected a Microsoft product to be.”

I started by installing SUSE Linux Enterprise 10 in a child partition of Hyper-V Beta1 (included in RC1 of Windows Server 2008) and then tried the Linux Integration Components for Microsoft Windows Server 2008 Hyper-V (Beta1), from now on “IC”. Then I injected the Xen kernel, following the installation path Microsoft plans for acceptable performance of a Linux virtual machine on Hyper-V. For this exercise I used a small server that my company had in its lab (shhhhhhh!!!):

·Intel Xeon, 2 cores

·4 GB RAM

·1 TB hard disk

·2 × 1 Gb Ethernet network cards

This seemed a good machine for installing Windows Server 2008 RC1 with Hyper-V and running some virtual machines on it. For my testing I had to make do with the x64 version of SUSE, as that was the only DVD I had, and I had neither the time nor the bandwidth to get the 32-bit version (though most of the steps shown hold the same for the 32-bit version).

In Hyper-V Manager I created a virtual machine with 512 MB RAM and 1 CPU. The only special settings were that I used an emulated network adapter (Legacy Network Adapter) and did not assign a SCSI controller. I assigned the SUSE Linux Enterprise 10 SP1 ISO to the virtual DVD drive and started the virtual machine. The installation ran smoothly: the installer correctly recognized the emulated network card (DEC 21140), the emulated graphics card (S3 Trio 64) and the rest of the hardware. I only had to add the boot option linux vga=0x314 during setup to help set the proper video mode (a precautionary measure). After that I had a fully functional SUSE server, able to access the network and with its beautiful graphical user interface: although GNOME is not brilliant when it comes to performance, it is still acceptable. Here I felt the first signs of satisfaction.

At this point I moved on to installing the IC, to get tighter integration between the Linux machine and Hyper-V and to verify the performance improvement. The IC mainly provide two things:

·Support for synthetic devices, in particular network cards and the SCSI controller. This way Hyper-V can use the VMBus to communicate with the parent partition and thus increase performance.

·Hypercall adapter: a thin software layer that sits “under” the modified Xen kernel and translates Xen virtualization calls into functions that Hyper-V understands (hypercalls).

Warning: at the current version, the Linux Integration Components for Microsoft Windows Server 2008 Hyper-V only support the following Linux versions:

·SUSE Linux Enterprise Server 10 SP1 (x86)

·SUSE Linux Enterprise Server 10 SP1 (x64)

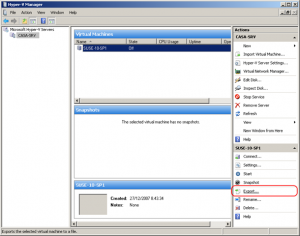

Support is provided only through this newsgroup: microsoft.bet.windowsserver.hyper-v.linux

Before installing the IC for Linux, we exported the VM (a dedicated menu item in Hyper-V Manager) to have a “rescue copy” in case something went wrong, and also to have two virtual machines that differ “only” in the presence or absence of the IC.

(Export menu in Hyper-V Manager)

The first step in installing the IC is to … read the attached document (Integration Components for Linux Read Me.docx), which describes all the steps to be done. I know, I know … most system administrators prefer to start by inserting the CD directly (virtual or not) and watching everything crash, but I decided to save myself the headache and read the readme first. I assure you that in this case reading the readme first helps …

Choosing the x64 version of the operating system proved to be the most problematic: the Xen kernel included in the x64 version of SUSE LE 10 SP1 does not start after installing the x2v hypercall adapter (the component that translates Xen calls into Hyper-V calls).

Therefore you must patch the kernel. The required patches are available on the IC ISO.

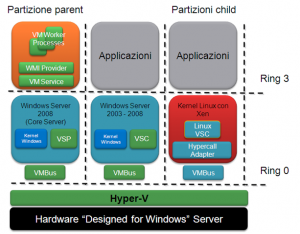

The secret behind the Xen kernel on Hyper-V is that one of Hyper-V’s main objectives is to be a virtualization platform that is as “open” as possible and able to perform at its best with different operating systems.

You can run Linux on Hyper-V without any modification (the list of Linux versions that Microsoft supports in production will be made public with the RTM of Hyper-V). However, this uses the emulated devices (basically the same as Virtual Server 2005 R2), and performance is not particularly bright. Unfortunately, this is the method most documented on the web, because it is so easy and because most administrators are not aware of the modifications required to boost performance, which are the aim of this article.

To improve the performance of Linux virtual machines running in Hyper-V child partitions, Microsoft and XenSource (now Citrix) are working together to develop synthetic device drivers for the VMBus and a hypercall adapter for use with a Xen-enabled Linux kernel in the child partition. The hypercall adapter, in particular, is a thin layer of software that sits under the Xen kernel (child) and translates calls to the Xen virtualization subsystem into calls that Hyper-V understands.

(Architecture of the Integration Components for Linux with the hypercall adapter)

Let me briefly cover the procedure I followed to install the IC in SUSE LE 10 SP1 x64 (for full descriptions, see the documentation included with the IC):

1. Copy the contents of the IC for Linux ISO file into a directory on your system. Using Hyper-V Manager, assign the ISO file to the virtual DVD drive. Then mount the virtual DVD in your SUSE virtual machine (e.g. mount /dev/cdrom /mnt/cdrom) and copy the contents of the ISO into /opt/linux_ic, which you have to create:

$ mkdir /opt/linux_ic

$ cp -R /mnt/cdrom/* /opt/linux_ic
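The copy in step 1 can be wrapped in a small function that also confirms the two files used later in this x64 walkthrough (setup.pl and patch/x2v-x64-sles.patch) actually arrived. This is a minimal sketch, assuming the ISO is already mounted; copy_ic_files is a hypothetical name, not part of the IC.

```shell
#!/bin/sh
# copy_ic_files is a hypothetical helper, not part of the IC package.
# Usage: copy_ic_files <iso-mount-point> <target-dir>
copy_ic_files() {
    src=$1    # e.g. /mnt/cdrom
    dest=$2   # e.g. /opt/linux_ic
    mkdir -p "$dest" || return 1
    # "$src"/. copies the directory contents, hidden files included
    cp -R "$src"/. "$dest"/ || return 1
    # Files this article uses later (step 4 patch, step 6 setup script)
    for f in setup.pl patch/x2v-x64-sles.patch; do
        if [ ! -f "$dest/$f" ]; then
            echo "missing after copy: $dest/$f" >&2
            return 1
        fi
    done
    echo "IC files copied to $dest"
}
```

For example, in a root shell where the function has been defined:

$ mount /dev/cdrom /mnt/cdrom && copy_ic_files /mnt/cdrom /opt/linux_ic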

2. Locate the development tools and kernel sources among your SUSE packages (RPM format) and install them, either with the rpm command or, if you prefer, with YaST2.
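Before moving on, it can help to confirm that the tools the later steps call (gcc for the kernel build, make, patch and perl) are on the PATH and that the kernel source tree used in step 4 exists. A minimal sketch; check_build_prereqs is a hypothetical name. On SUSE you could also query the packages directly, e.g. rpm -q kernel-source gcc make patch, where the exact package names are an assumption.

```shell
#!/bin/sh
# check_build_prereqs is a hypothetical helper.
# Usage: check_build_prereqs <kernel-source-dir>
check_build_prereqs() {
    srcdir=$1   # e.g. /usr/src/linux-2.6.16.46-0.12
    status=0
    # Tools invoked by steps 4-6: compiler, make, patch, perl
    for tool in gcc make patch perl; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing tool: $tool" >&2
            status=1
        fi
    done
    # Kernel source tree that step 4 patches
    if [ ! -d "$srcdir" ]; then
        echo "missing kernel source tree: $srcdir" >&2
        status=1
    fi
    return $status
}
```

For example: check_build_prereqs /usr/src/linux-2.6.16.46-0.12 && echo "ready to build"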

3. Install the Xen kernel from the command line. For the x64 platform, use the following command (assuming the ISO of your SUSE DVD is mounted at /mnt/cdrom):

$ rpm -ivh /mnt/cdrom/suse/x86_64/kernel-xen-2.6.16.46-0.12.x86_64.rpm
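Step 5 copies /boot/config-2.6.16.46-0.12-xen into the source tree, so a quick check right after installing the kernel-xen package can catch a failed install early (rpm -q kernel-xen is another quick check). This sketch assumes a Xen-enabled kernel config sets CONFIG_XEN=y; check_xen_config is a hypothetical name.

```shell
#!/bin/sh
# check_xen_config is a hypothetical helper.
# Usage: check_xen_config /boot/config-2.6.16.46-0.12-xen
check_xen_config() {
    cfg=$1
    if [ ! -f "$cfg" ]; then
        echo "not found: $cfg (did the kernel-xen package install?)" >&2
        return 1
    fi
    # Assumption: a Xen-enabled kernel config contains CONFIG_XEN=y
    if ! grep -q '^CONFIG_XEN=y$' "$cfg"; then
        echo "$cfg does not set CONFIG_XEN=y" >&2
        return 1
    fi
    echo "ok: $cfg"
}
```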

4. Apply the required patches to the Xen kernel. For x64 they are included on the IC ISO, whose contents we have already copied to the SUSE file system. Run the following commands:

$ cd /usr/src/linux-2.6.16.46-0.12

$ cp /opt/linux_ic/patch/x2v-x64-sles.patch .

$ patch -l -p1 < x2v-x64-sles.patch
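Because a half-applied patch leaves the kernel tree in an awkward state, one option is to run patch with --dry-run first and apply for real only if every hunk fits. A minimal sketch using the same -l and -p1 options as above, plus -N (--forward) so patch does not offer to reverse a patch that looks already applied; apply_ic_patch is a hypothetical name. It reads the patch from /opt/linux_ic directly instead of copying it into the source tree, which should not change the result.

```shell
#!/bin/sh
# apply_ic_patch is a hypothetical helper.
# Usage: apply_ic_patch <kernel-source-dir> <patch-file>
apply_ic_patch() {
    srcdir=$1
    patchfile=$2
    # Make the patch path absolute, since we cd into the source tree below
    case $patchfile in
        /*) ;;
        *) patchfile=$PWD/$patchfile ;;
    esac
    (
        cd "$srcdir" || exit 1
        # Trial run: reports failing hunks without modifying any file
        if ! patch -l -p1 -N --dry-run < "$patchfile"; then
            echo "dry run failed; source tree left untouched" >&2
            exit 1
        fi
        # Real run with the same options
        patch -l -p1 -N < "$patchfile"
    )
}
```

For example: apply_ic_patch /usr/src/linux-2.6.16.46-0.12 /opt/linux_ic/patch/x2v-x64-sles.patch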

5. Rebuild the Xen kernel and “install” it in place of the existing kernel:

$ cp /boot/config-2.6.16.46-0.12-xen ./.config

$ make oldconfig

$ make vmlinuz

6. Install the hypercall adapter

At this point you can install the hypercall adapter with the following commands:

$ cd /opt/linux_ic

$ perl setup.pl x2v /boot/grub/menu.lst

At this point you must restart the virtual machine, and SUSE will load the modified Xen kernel.
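setup.pl is given /boot/grub/menu.lst as an argument, presumably to add or change a boot entry, and a bad boot entry is exactly what makes the restart fail. A cautious variant is to keep a copy of menu.lst and look at what changed before rebooting. A minimal sketch; backup_and_run and the .before-ic suffix are hypothetical names.

```shell
#!/bin/sh
# backup_and_run is a hypothetical helper.
# Usage: backup_and_run <file> <command> [args...]
backup_and_run() {
    target=$1
    shift
    backup=$target.before-ic
    # Keep a copy, preserving permissions and timestamps
    cp -p "$target" "$backup" || return 1
    if ! "$@"; then
        echo "command failed; original kept in $backup" >&2
        return 1
    fi
    # Show what the command changed (diff exits 1 when files differ)
    diff -u "$backup" "$target"
    return 0
}
```

For example, from /opt/linux_ic:

$ backup_and_run /boot/grub/menu.lst perl setup.pl x2v /boot/grub/menu.lst

After the restart, uname -r shows which kernel actually booted; assuming the rebuilt kernel keeps the stock naming, it should end in -xen.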

7. Install the synthetic device drivers (VSC) and the VMBus

The last operation is installing the synthetic device drivers (VSC):

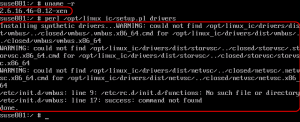

During the VSC installation I received three warnings and two errors from the vmbus startup script (/etc/init.d/vmbus). You can safely ignore both the warnings and the errors: they are “teething problems” of the script (it’s still Beta1) and in no way jeopardize the success of the installation. A final restart of the SUSE VM completes the IC installation.

(Warning and error messages)

I believe the vmbus error messages are due to the startup script being written for Red Hat rather than SUSE.

Indeed, on SUSE the line in the script that loads /etc/init.d/functions should load /etc/rc.status instead. Similarly, instead of calling success, as is customary on Red Hat, on SUSE the script should call rc_status -v.

If someone tries making these changes to the script before running it and posts the results in a comment to this post, I would be grateful.
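For anyone who wants to try, the two substitutions described above can be scripted with sed. This is an untested sketch: it keeps the original script as vmbus.redhat, assumes GNU sed (for -i and \b), and replaces every standalone success word, so review the printed diff before running the script. SUSE init scripts usually also call rc_reset and rc_exit, which this sketch does not add. adapt_initscript_for_suse is a hypothetical name.

```shell
#!/bin/sh
# adapt_initscript_for_suse is a hypothetical, untested helper.
# Usage: adapt_initscript_for_suse /etc/init.d/vmbus
adapt_initscript_for_suse() {
    script=$1
    # GNU sed: edit in place, keeping the original as "$script.redhat"
    sed -i.redhat \
        -e 's|/etc/init\.d/functions|/etc/rc.status|' \
        -e 's/\bsuccess\b/rc_status -v/g' \
        "$script" || return 1
    # Print the changes for review (diff exits 1 when files differ)
    diff -u "$script.redhat" "$script"
    return 0
}
```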

8. Reconfigure the graphics:

Installing the IC causes the X Server configuration to be lost; you can restore it by running SaX2.

At this point we shut down the SUSE virtual machine and then, from Hyper-V Manager:

·We removed the emulated network adapter (Legacy Network Adapter) from the VM’s hardware configuration and added a synthetic network card (Network Adapter) in its place.

·We also added a SCSI controller and connected the virtual hard disk to it.

On restarting the SUSE virtual machine, we found the new hardware (based on synthetic devices) to be fully functional.

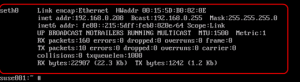

(Output of ifconfig: seth0 is the card based on the synthetic device)

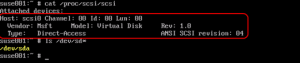

(SCSI Devices summary: Note the vendor MSFT)
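The two things the screenshots show (a seth0 interface and a SCSI device whose vendor is MSFT) can also be checked from a script after switching to synthetic devices. A minimal sketch, assuming the usual Linux locations /sys/class/net and /proc/scsi/scsi; check_synthetic_devices is a hypothetical name.

```shell
#!/bin/sh
# check_synthetic_devices is a hypothetical helper.
# Usage: check_synthetic_devices [net-class-dir] [scsi-summary-file]
check_synthetic_devices() {
    netdir=${1:-/sys/class/net}      # one entry per network interface
    scsifile=${2:-/proc/scsi/scsi}   # SCSI device summary
    status=0
    if [ -e "$netdir/seth0" ]; then
        echo "seth0 present (synthetic NIC)"
    else
        echo "seth0 not found in $netdir" >&2
        status=1
    fi
    # Vendor string may appear as MSFT or Msft, so match case-insensitively
    if grep -qi 'msft' "$scsifile" 2>/dev/null; then
        echo "SCSI device with vendor MSFT present"
    else
        echo "no MSFT SCSI device listed in $scsifile" >&2
        status=1
    fi
    return $status
}
```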

That is all there is to configuring the Integration Components for Linux, and remember that we are only at Beta.

After that, we ran a few load stress tests on both virtual machines: the one with the IC and Xen kernel installed and the unmodified one. The VM with the IC and Xen kernel seemed to perform 25–35% better than the unmodified one, which is a great improvement. The stress tools we had are too basic to draw firm conclusions, but you really can feel the difference. If Hyper-V ends up being your choice, IC and Xen kernel injection is worth considering for your Linux VMs.
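For anyone repeating the comparison, “X% better” depends on the metric. Assuming it is measured as elapsed time for the same workload on both VMs, the improvement is (t_unmodified − t_IC) / t_unmodified × 100. A tiny helper that computes it with awk; pct_faster is a hypothetical name.

```shell
#!/bin/sh
# pct_faster is a hypothetical helper.
# Usage: pct_faster <seconds-on-unmodified-vm> <seconds-on-ic-vm>
# Prints the reduction in elapsed time as a percentage of the baseline.
pct_faster() {
    awk -v base="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (base - new) / base * 100 }'
}
```

For example, pct_faster 100 70 prints 30.0. If your tool reports throughput instead of time, use (new − base) / base instead.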

Yes, as I said at the beginning, getting the IC and Xen kernel working correctly inside virtual machines on Hyper-V is not as easy as I expected. Still, if Hyper-V is going to be your virtualization platform and you plan to run Linux VMs, you had better be ready to put up with it.

I hope this article is helpful, and that readers will leave comments on things I missed or would like to add. Share the knowledge!!!

WhataVM,

Fedora 8 on Hyper-V is significantly easier. Set the NIC to legacy adapter, load the disk (or ISO) and proceed as normal … simple

Did you try to connect two virtual machines via the VMBus?
